Text-driven style transfer aims to merge the style of a reference image with content described by a text prompt. Recent advances in text-to-image models have improved the nuance of style transformations, yet significant challenges remain, particularly overfitting to reference styles, limited stylistic control, and misalignment with textual content. In this paper, we propose three complementary strategies to address these issues. First, we introduce a cross-modal Adaptive Instance Normalization (AdaIN) mechanism that better integrates style and text features, improving alignment. Second, we develop a Style-based Classifier-Free Guidance (SCFG) approach that enables selective control over stylistic elements, reducing irrelevant influences. Finally, we incorporate a teacher model during the early generation stages to stabilize spatial layouts and mitigate artifacts. Extensive evaluations demonstrate significant improvements in style transfer quality and in alignment with textual prompts. Furthermore, our approach can be integrated into existing style transfer frameworks without fine-tuning.

Our solution is fine-tuning-free and can be combined with different existing methods.

Cross-Modal AdaIN: We propose a novel method for text-driven style transfer that better integrates both text and image conditioning. Our approach aims to achieve a balanced fusion of these two conditioning inputs, ensuring that they complement each other effectively.

Building on AdaIN, we develop a cross-modal AdaIN mechanism that integrates text and style conditioning in a way that respects their distinct roles. We apply AdaIN between two feature maps: the feature map produced by querying the text condition is normalized using the statistics of the style feature map.
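A minimal NumPy sketch of this fusion, assuming the standard AdaIN formula AdaIN(x, y) = σ(y) · (x − μ(x)) / σ(x) + μ(y), where x is the output of the attention branch that attends to text tokens and y is the output of the branch that attends to style-image tokens. Computing the statistics per channel over the token axis, the single-head attention, and the helper names `adain`, `attention`, and `cross_modal_adain` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def adain(content: np.ndarray, style: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Adaptive Instance Normalization over the token axis.

    content, style: arrays of shape (tokens, channels). Each channel of
    `content` is re-centred and re-scaled so that its mean and standard
    deviation over tokens match those of the same channel in `style`.
    """
    c_mean = content.mean(axis=0, keepdims=True)
    c_std = content.std(axis=0, keepdims=True)
    s_mean = style.mean(axis=0, keepdims=True)
    s_std = style.std(axis=0, keepdims=True)
    return s_std * (content - c_mean) / (c_std + eps) + s_mean


def attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Plain single-head scaled dot-product attention."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def cross_modal_adain(q, k_text, v_text, k_style, v_style):
    """Hypothetical cross-modal fusion: instead of adding the text and
    style attention outputs, normalize the text-attended features with the
    statistics of the style-attended features."""
    text_feat = attention(q, k_text, v_text)     # latent queries attend to text tokens
    style_feat = attention(q, k_style, v_style)  # latent queries attend to style tokens
    return adain(text_feat, style_feat)
```

In this form the spatial pattern of the output is driven by the text branch, while only its first- and second-order channel statistics are borrowed from the style branch, which is one way to read "respects their distinct roles".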

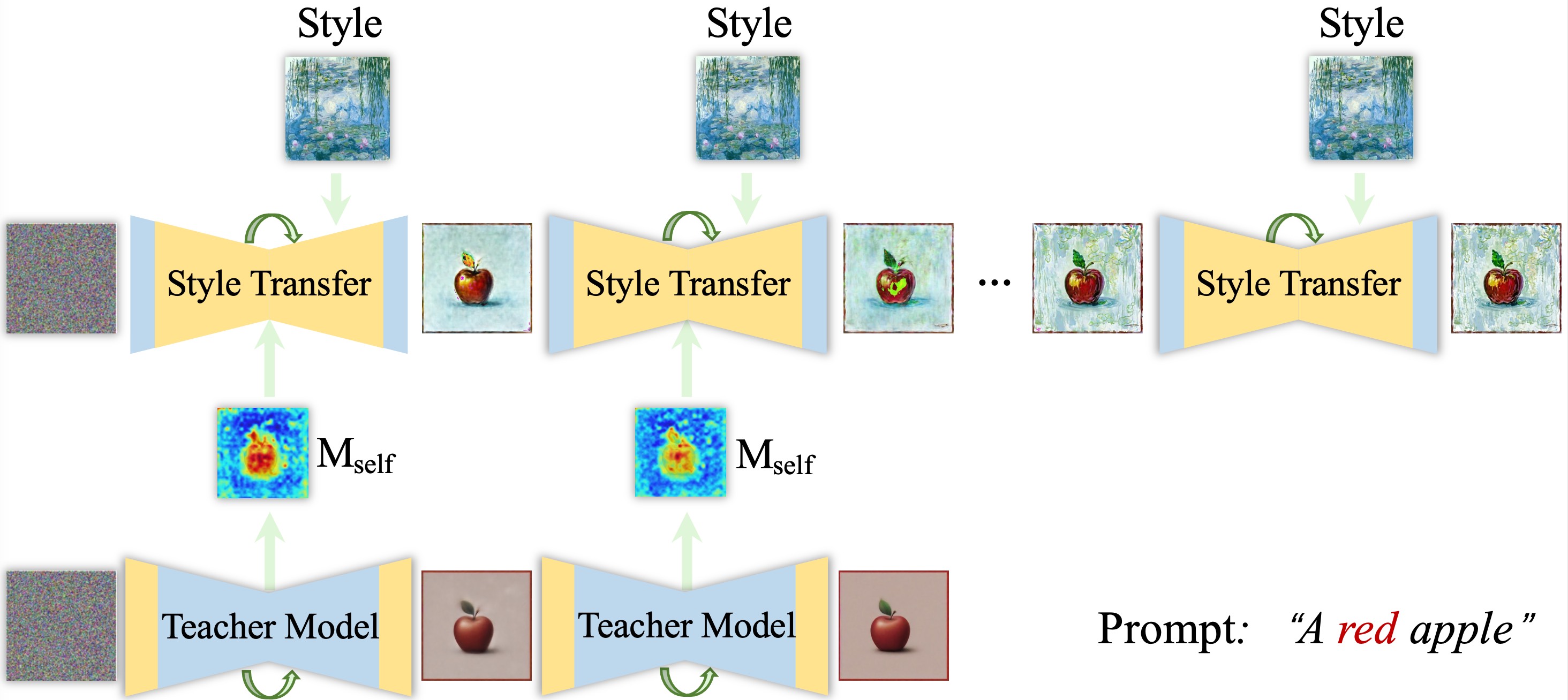

Teacher Model: We propose a method that stabilizes the layout by selectively replacing certain self-attention maps of the stylized image with those from the original diffusion model. During the denoising process, the Teacher Model (e.g., SDXL) keeps the layout stable, guiding the style transfer model to preserve the structure while applying the desired stylistic transformation.
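The replacement step can be sketched in NumPy under simplifying assumptions: a single self-attention layer, with the stylized branch and the teacher branch each computing their own queries and keys. During the first `teacher_steps` denoising steps, the stylized branch reuses the teacher's attention map softmax(QKᵀ/√d) but applies it to its own values, so the teacher decides where features are routed (layout) while the stylized values decide what is routed. The function names, the parameter `teacher_steps`, and the choice of replacing the map in this particular layer are hypothetical; the text above only states that certain self-attention maps are replaced.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention_map(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Attention map softmax(Q K^T / sqrt(d)), shape (tokens, tokens)."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1]))


def stylized_self_attention(q_style, k_style, v_style, q_teacher, k_teacher,
                            step: int, teacher_steps: int) -> np.ndarray:
    """Self-attention of the stylized branch at denoising step `step`.

    For step < teacher_steps the attention map comes from the teacher
    (e.g. an SDXL pass without style conditioning); afterwards the
    stylized branch uses its own map. Values always come from the
    stylized branch.
    """
    if step < teacher_steps:
        attn = self_attention_map(q_teacher, k_teacher)
    else:
        attn = self_attention_map(q_style, k_style)
    return attn @ v_style
```

Here `teacher_steps` plays the role of what the StyleCrafter ablation calls "timestep": the number of denoising steps during which the Teacher Model is involved.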

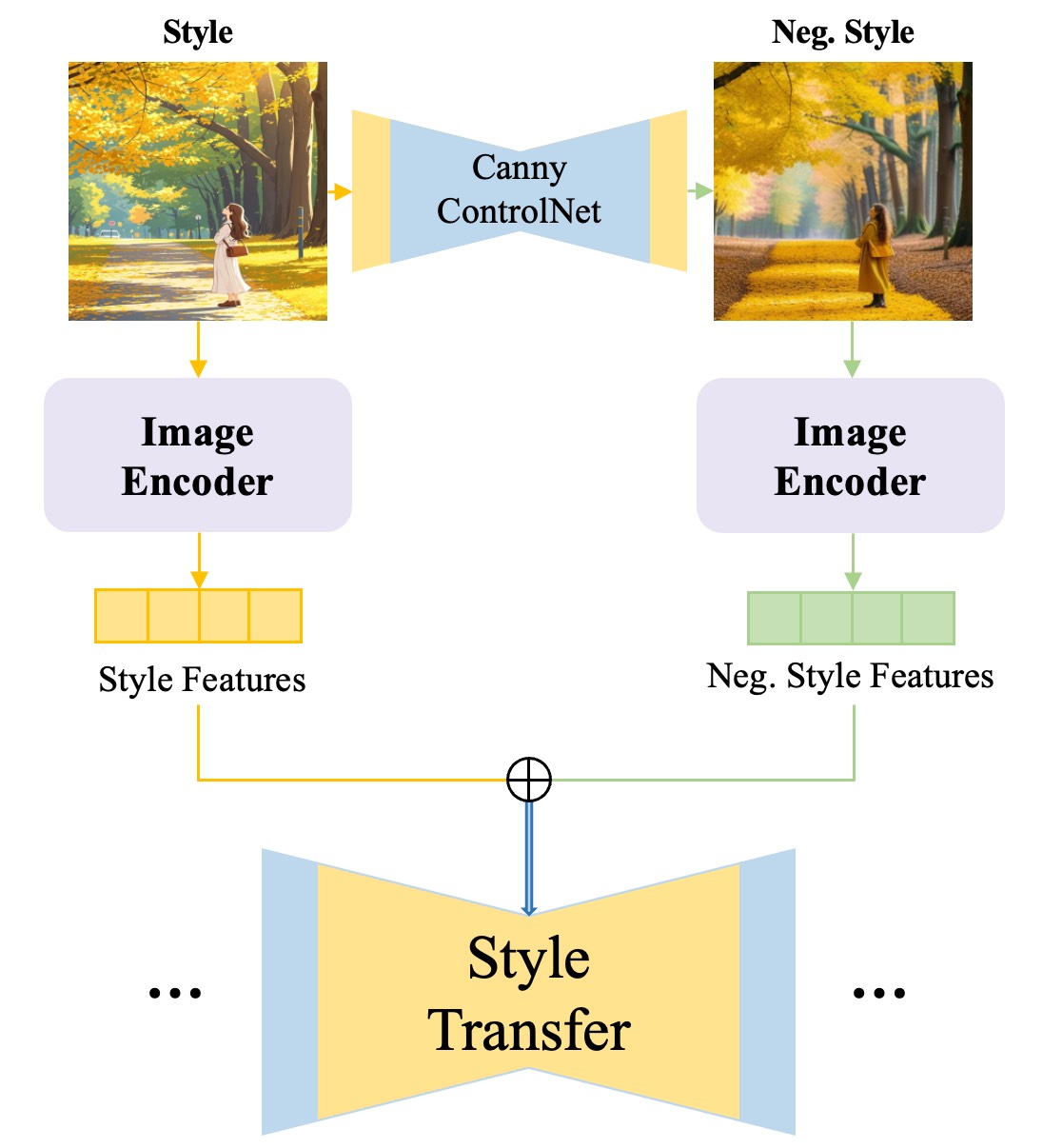

Style-Based CFG: We use a layout-controlled generation model, such as ControlNet, to create a negative style image that preserves the structural features of the reference image but omits the target style. Using this image as the negative condition in classifier-free guidance mitigates the risk of overfitting to irrelevant style components.
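One plausible way to wire the negative style image into guidance is sketched below. It borrows the compositional two-scale guidance used by InstructPix2Pix, with the negative style image taking the place of the empty style condition as the baseline for the style term. The exact formula, the weights `w_text` and `w_style`, and the callable `predict_noise` (a stand-in for a style-conditioned denoiser such as a CSGO-style UNet) are assumptions for illustration, not the paper's definitions.

```python
from typing import Callable, Optional

import numpy as np

# Hypothetical denoiser signature: (latent, text embedding or None, style embedding) -> noise
Denoiser = Callable[[np.ndarray, Optional[np.ndarray], np.ndarray], np.ndarray]


def style_cfg(predict_noise: Denoiser, z: np.ndarray, text: np.ndarray,
              style: np.ndarray, neg_style: np.ndarray,
              w_text: float = 7.5, w_style: float = 2.0) -> np.ndarray:
    """Two-scale guidance sketch with a negative style image as the style baseline.

    eps = eps(z, -, s_neg)
          + w_text  * (eps(z, c, s_neg) - eps(z, -, s_neg))
          + w_style * (eps(z, c, s)     - eps(z, c, s_neg))
    """
    eps_base = predict_noise(z, None, neg_style)  # no text, negative style image
    eps_text = predict_noise(z, text, neg_style)  # text, negative style image
    eps_full = predict_noise(z, text, style)      # text, reference style image
    return (eps_base
            + w_text * (eps_text - eps_base)
            + w_style * (eps_full - eps_text))
```

With `w_text = w_style = 1` the expression collapses to the fully conditioned prediction; increasing `w_style` pushes the prediction away from the negative-style prediction and toward the reference-style prediction, independently of the text scale.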

CSGO employs a widely used adapter-based model structure and is the first method trained on a meticulously curated dataset specifically designed for style transfer. We therefore selected it as the baseline for our experiments and built our modifications on top of it.

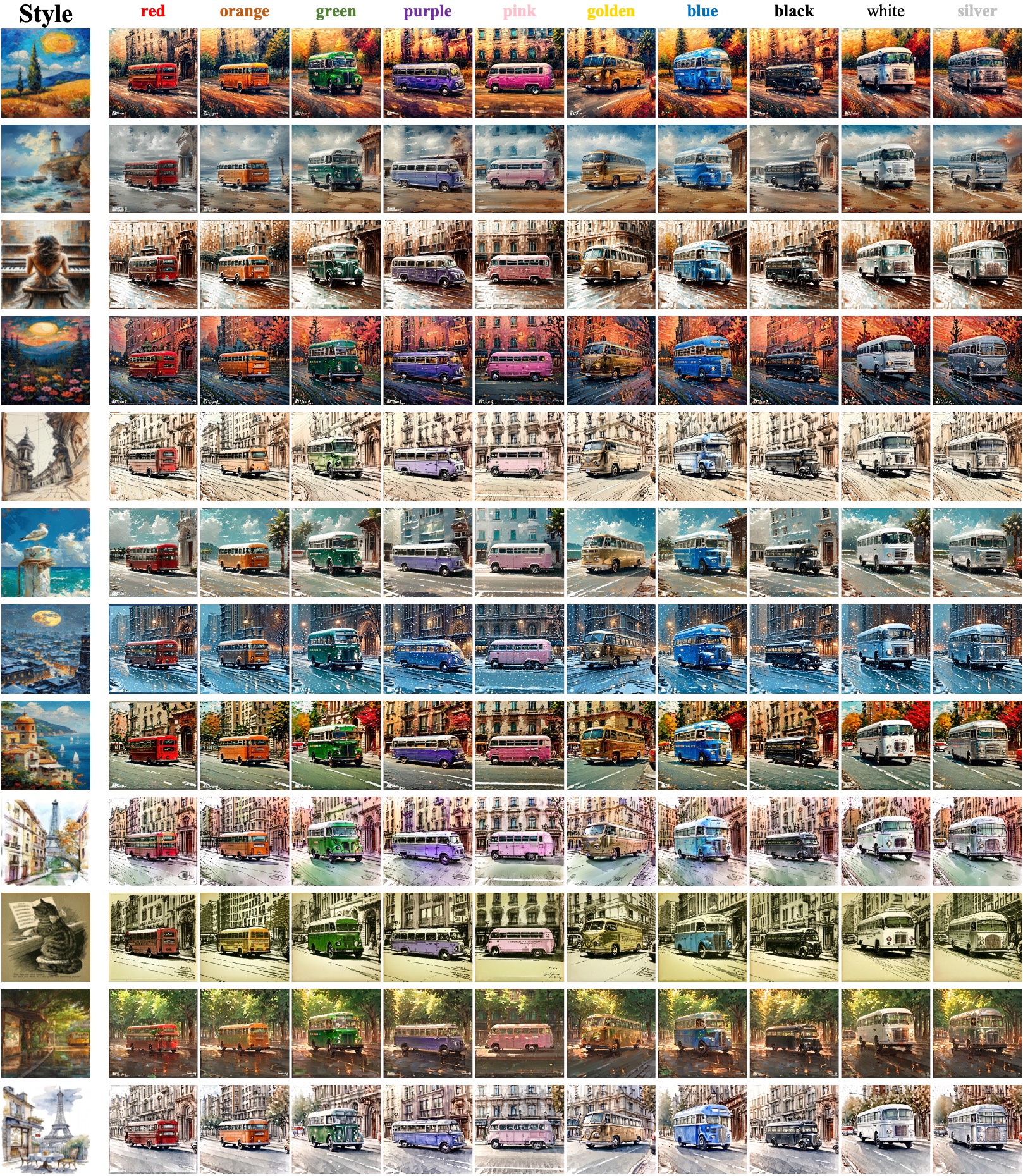

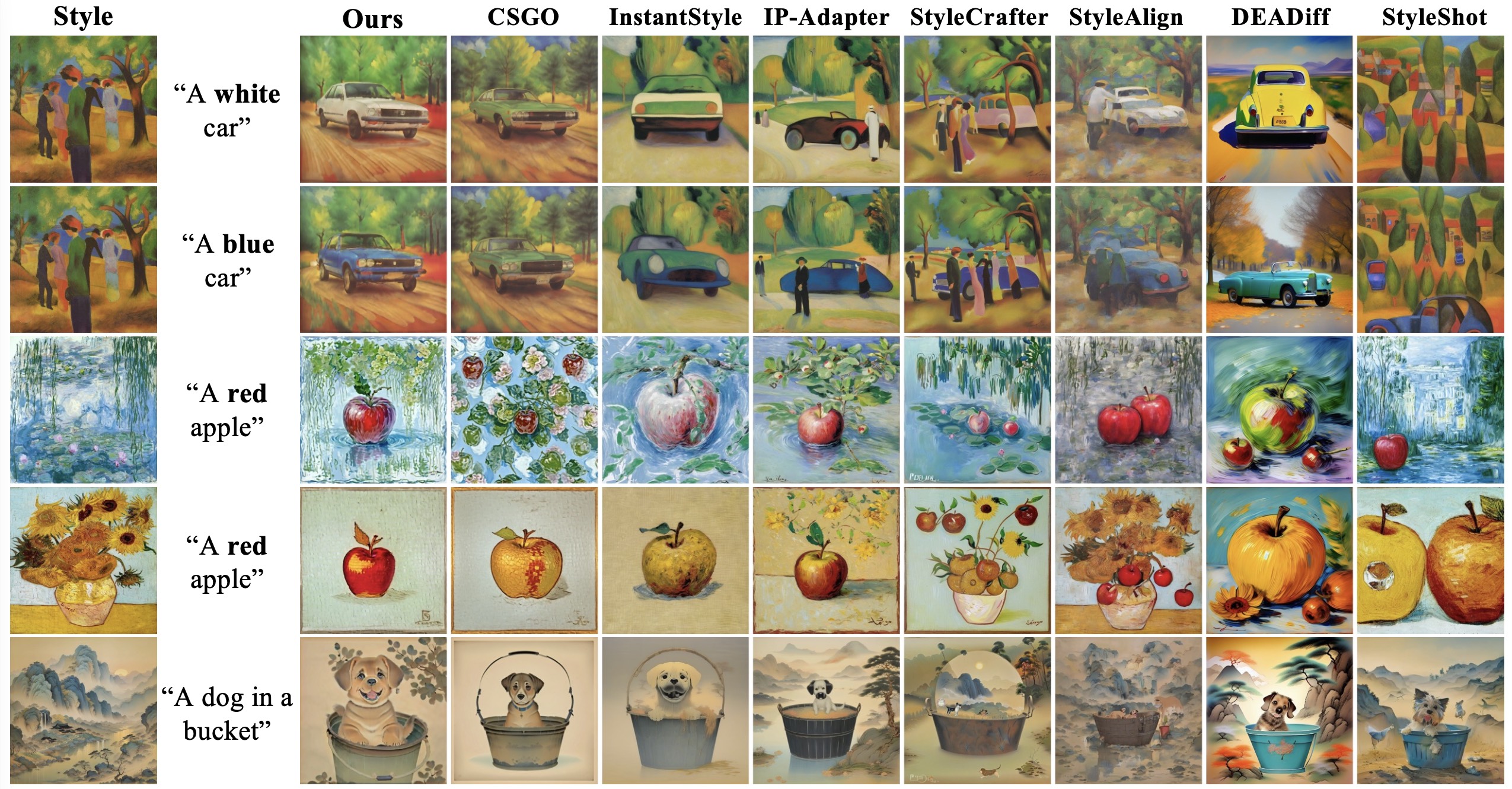

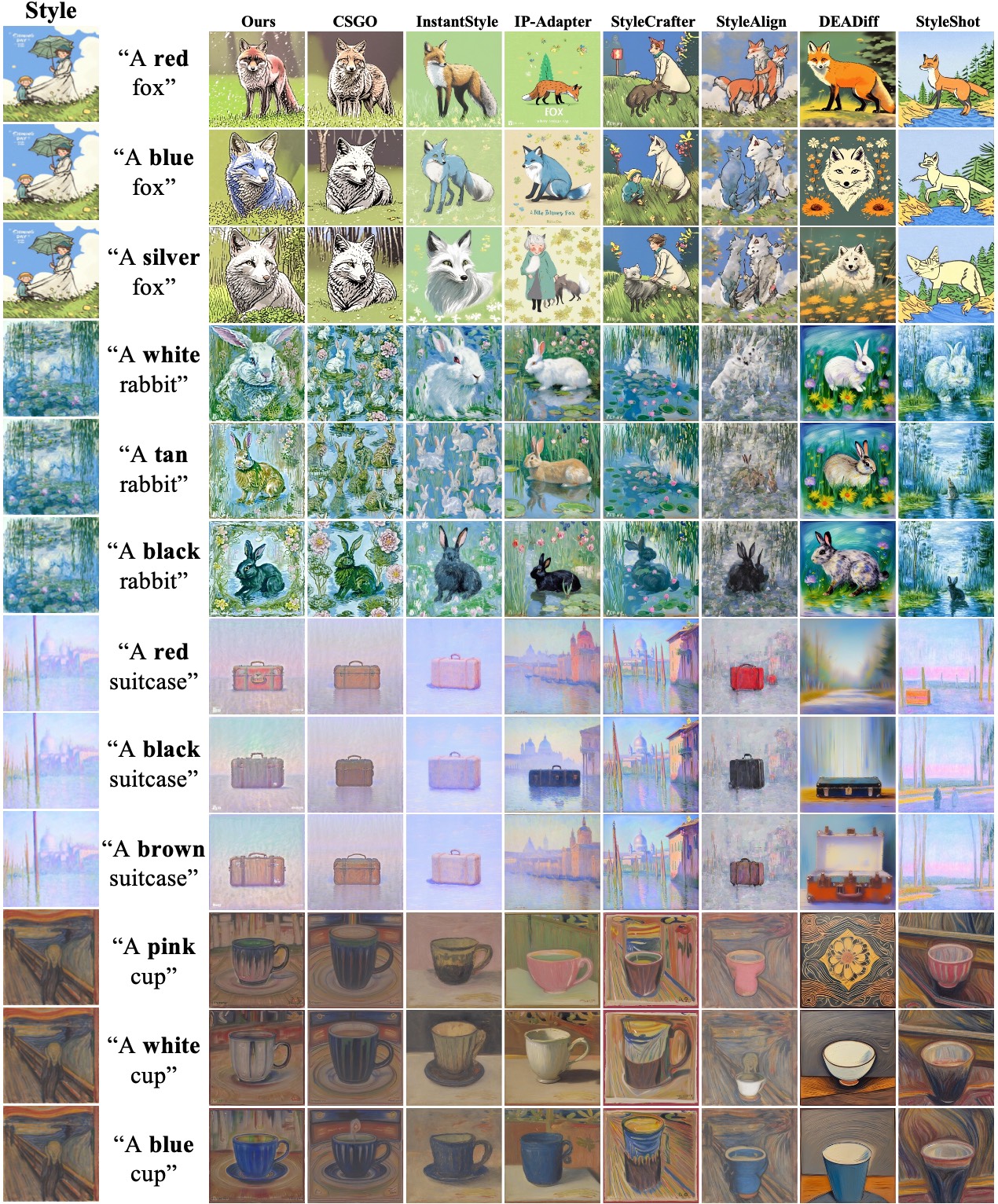

More results of our text-driven style transfer model. Given a style reference image, our method effectively reduces style overfitting, generating images that faithfully align with the text prompt while maintaining a consistent layout across varying styles. The prompts follow the format "A [color] bus".

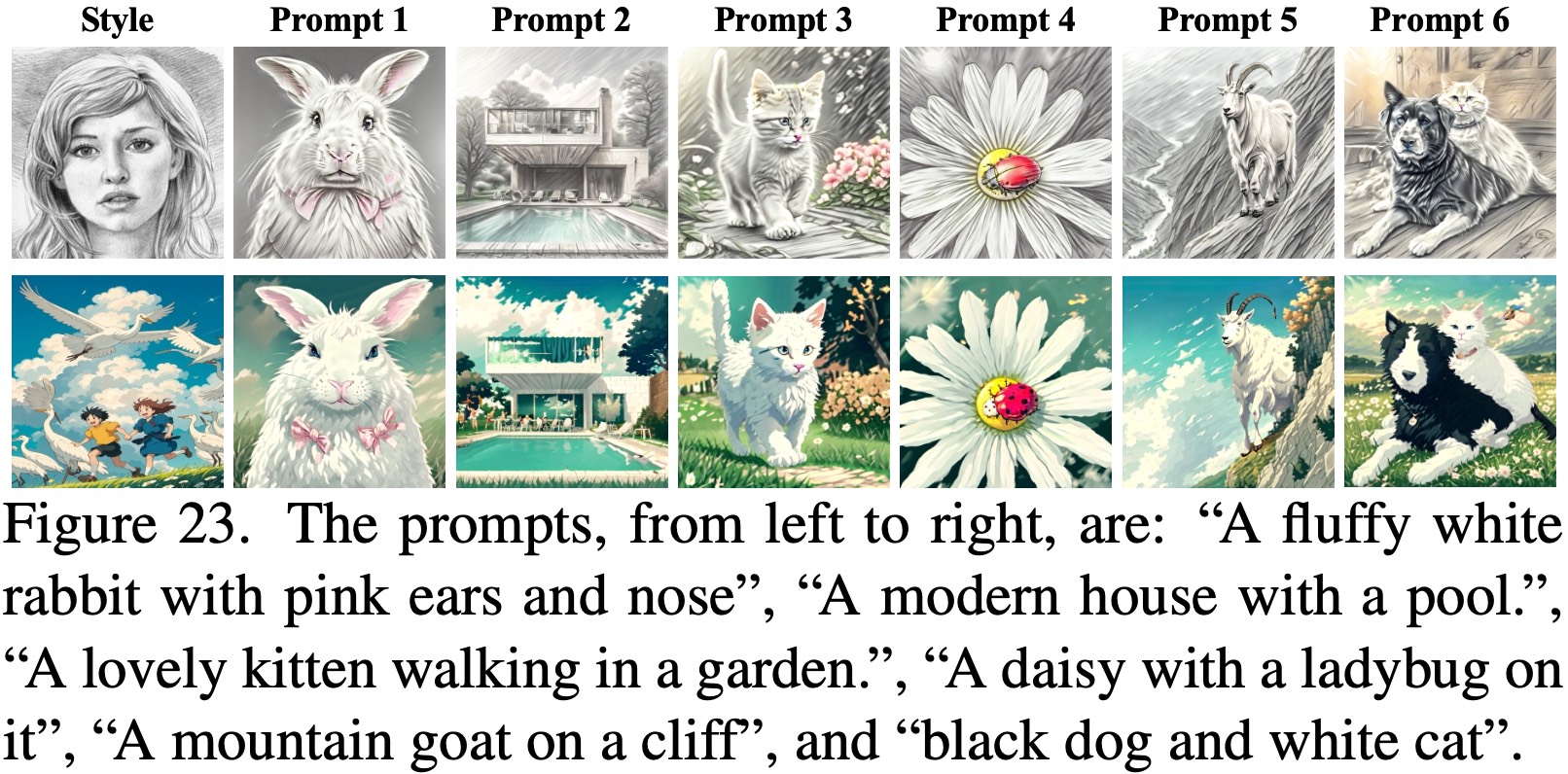

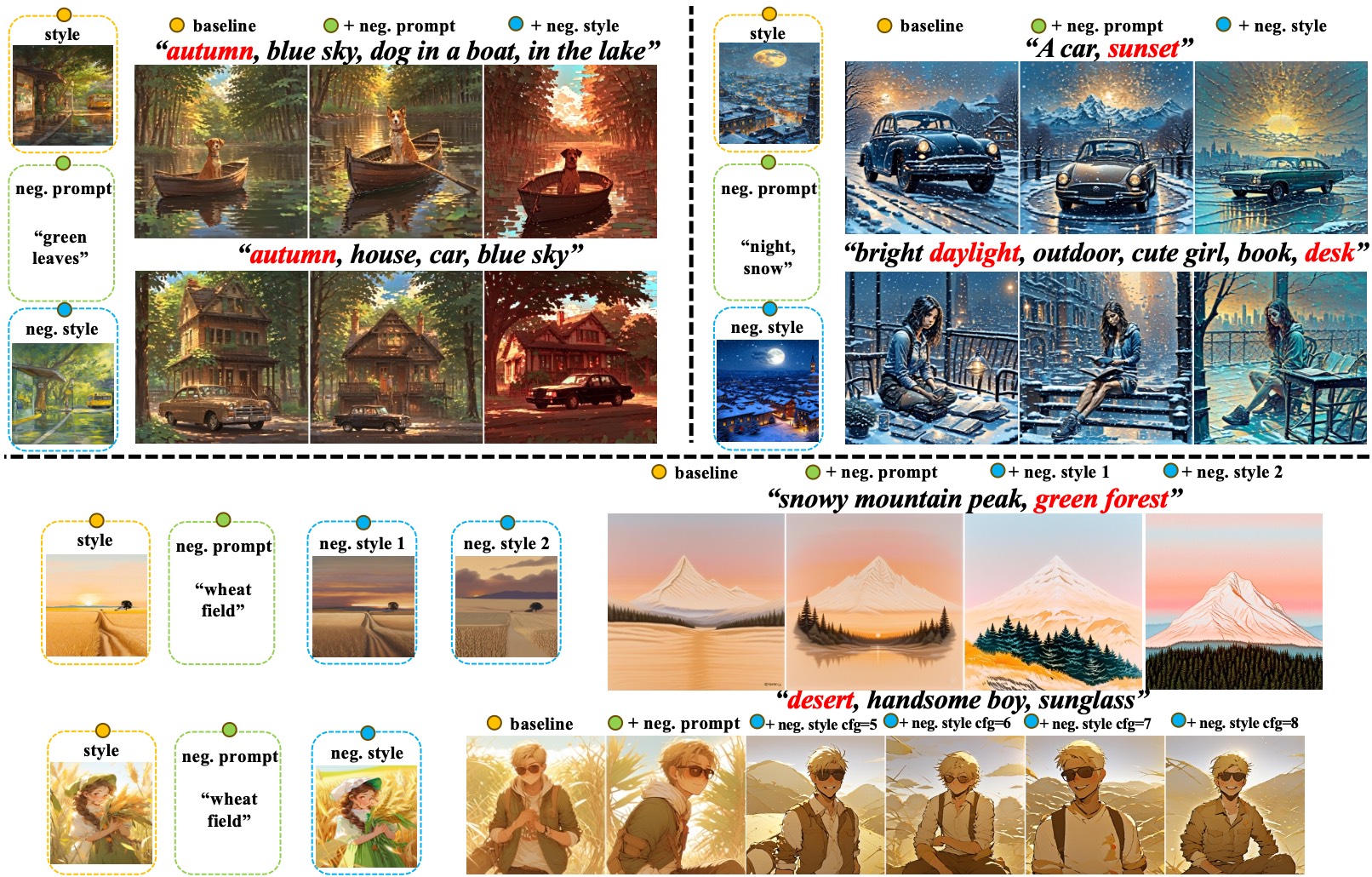

Additionally, the following image shows the result generated from a complex prompt.

Qualitative comparison: given a reference image and a text prompt, StyleStudio preserves the reference style while accurately adhering to the text prompt and keeping the layout stable.

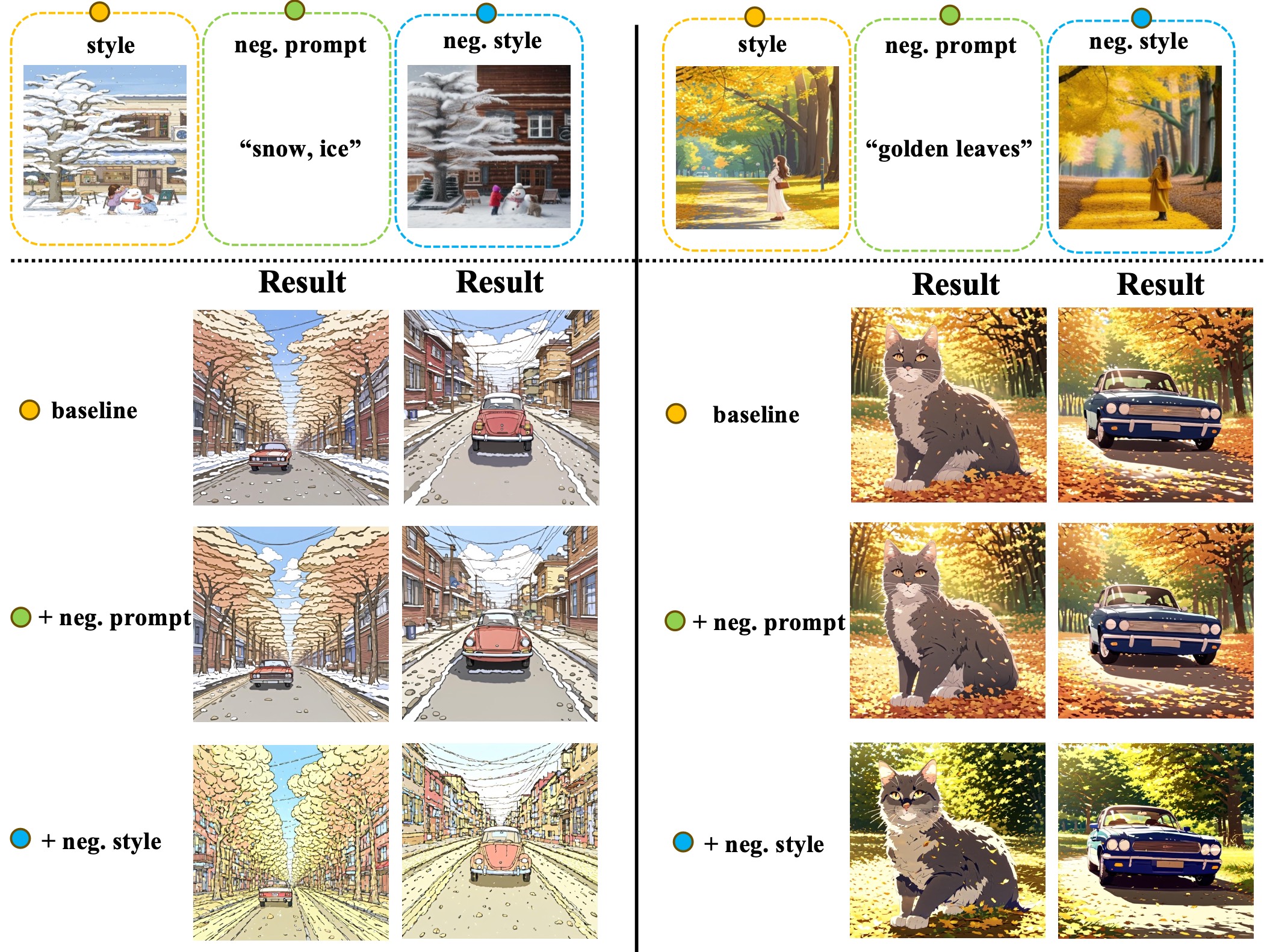

In the baseline images, unintended style elements such as snow and golden leaves are present, which do not align with the intended style. Adding a negative text prompt gives some control over these elements; however, unintended elements like snow are not effectively mitigated. By applying a negative style image instead, we achieve more effective control and successfully remove these unwanted elements.


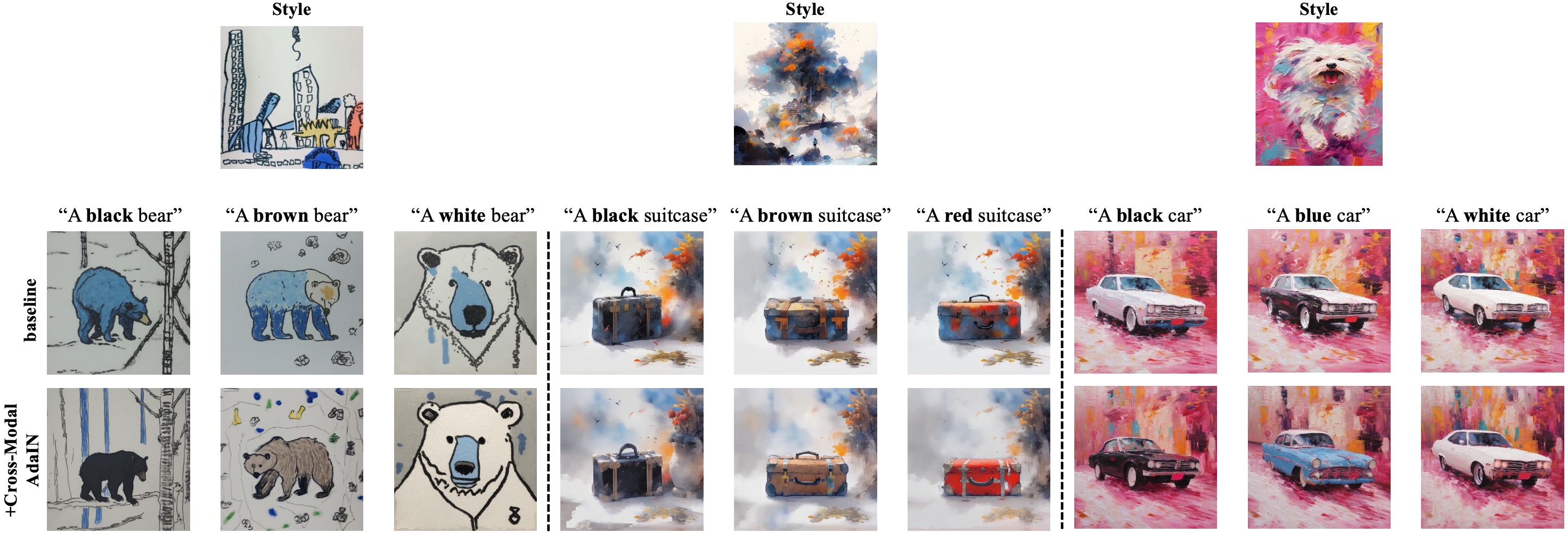

Qualitative results of applying Cross-Modal AdaIN to InstantStyle. Cross-Modal AdaIN effectively prevents style overfitting, and the generated images consistently align with the textual descriptions.

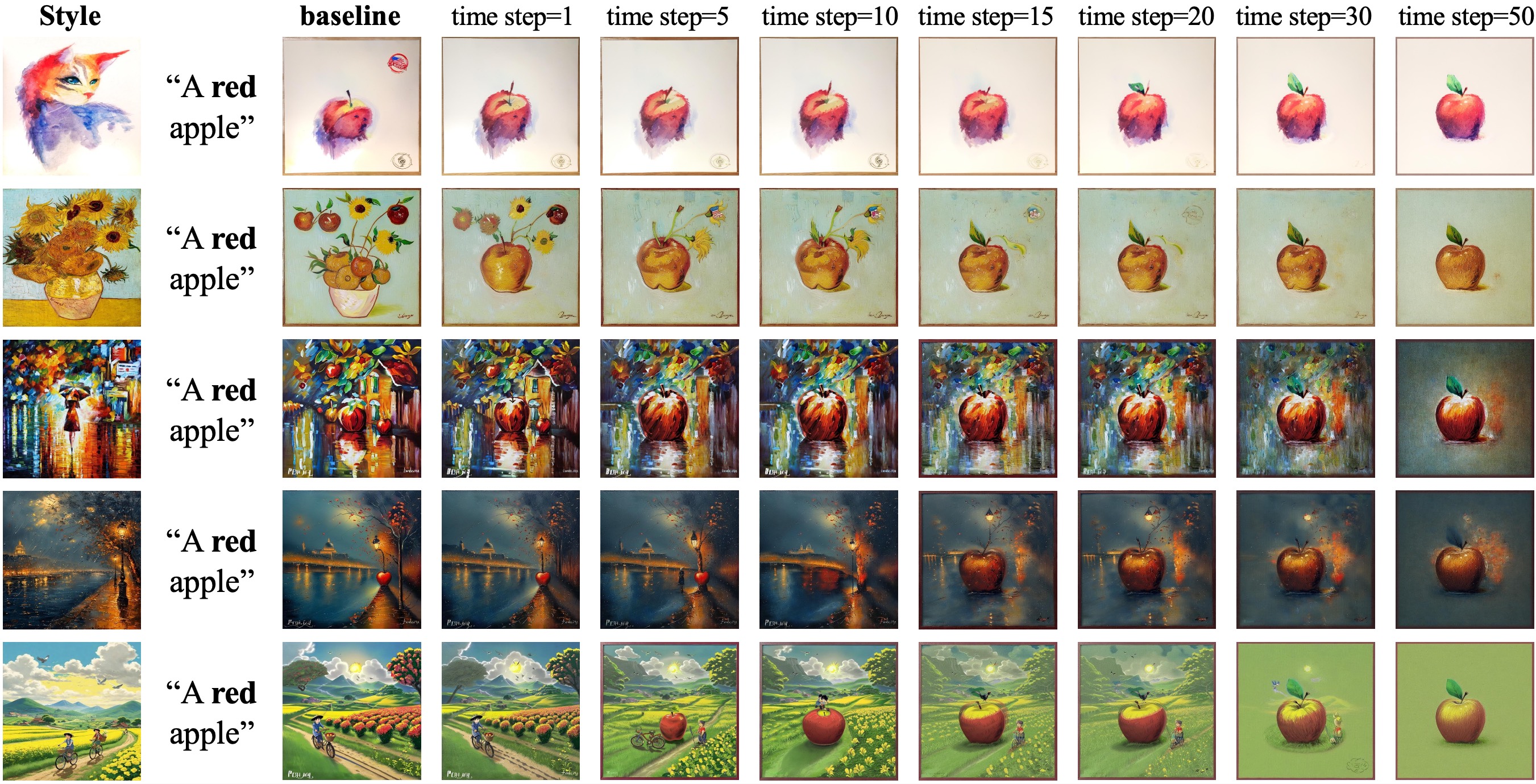

Impact of the Teacher Model on StyleCrafter image generation. "Timestep" denotes the number of denoising steps during which the Teacher Model is involved. Besides ensuring layout stability, the Teacher Model also reduces content leakage when applied to StyleCrafter.

@inproceedings{lei2025stylestudio,

title={StyleStudio: Text-Driven Style Transfer with Selective Control of Style Elements},

author={Lei, Mingkun and Song, Xue and Zhu, Beier and Wang, Hao and Zhang, Chi},

booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},

pages={23443--23452},

year={2025}

}